double dissent

-

How to add two vectors, fast

I’ve been building a Llama 3.2 inference engine from scratch, aptly named forward (purely as an excuse to stop procrastinating and learn modern C++ and CUDA). Were I reasonable, I would not have decided to build a tensor library first, then a neural network library on top of it, and finally the Llama 3.2 architecture as the cherry on top. But here we are. The first milestone was getting Llama to …

-

You are not the code

It was 2016. I was listening to Justin Bieber's Sorry (and probably so were you, don't judge), coding away at my keyboard in an office building in Canary Wharf, London. We were a small team working on a complex internal tool for power users. Our tools were Clojure and ClojureScript, which we wielded with mastery and pride. The great thing about building for advanced users is that they are as obses…

-

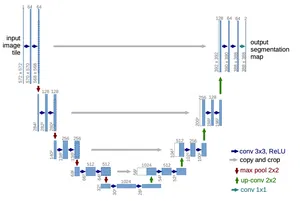

Reproducing U-Net

To get some deep learning research practice, I decided to do a bit of archaeology by reproducing older deep learning papers. Then I recalled Jeremy Howard discussing U-Net back in my fast.ai course days, and the impression it made on me: it was possibly the first time I encountered skip connections. So I read the paper and wondered: Is it possible to reproduce their results only from the paper, a…